Today, I compared three prediction methods: one-peak prediction, two-peak prediction, and 32-bin prediction. The 32-bin prediction performed best.

Summary:

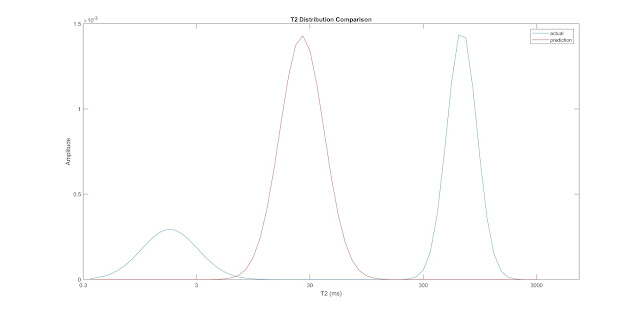

The following shows the one-peak prediction results at randomly selected depths.

The second shows the two-peak prediction results at randomly selected depths.

The third shows the 32-bin prediction results at randomly selected depths. The original T2 distribution has 64 bins; I averaged every two adjacent bins to reduce the number of outputs from 64 to 32, and then predicted all 32 outputs simultaneously.
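As a sketch of the bin reduction described above, assuming the T2 amplitudes are stored as a NumPy array with one row per depth and 64 columns (the array layout and the name `downsample_bins` are illustrative assumptions, not the original code), averaging each adjacent pair of bins is a single reshape:

```python
import numpy as np

def downsample_bins(t2: np.ndarray, factor: int = 2) -> np.ndarray:
    """Average every `factor` adjacent bins, e.g. (n_depths, 64) -> (n_depths, 32)."""
    n_depths, n_bins = t2.shape
    if n_bins % factor != 0:
        raise ValueError("number of bins must be divisible by factor")
    # Group consecutive bins into blocks of `factor` and take the mean of each block.
    return t2.reshape(n_depths, n_bins // factor, factor).mean(axis=2)

# Example: 3 depths with 64 bins each (synthetic values)
t2 = np.arange(3 * 64, dtype=float).reshape(3, 64)
t2_32 = downsample_bins(t2)
print(t2_32.shape)
print(t2_32[0, :3])
```

Because each depth stays in its own row, the 32 averaged values at a depth can be used directly as the targets of one multi-output network.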

Of the three, the 32-bin prediction performs best, especially over the first few hundred depths. The following shows the first 20 depths, which fit well.

The following is the R2 distribution of the 32-bin prediction.

It shows a higher concentration of high R2 values than before.

Next week, I will try combining the 32-bin prediction with the 6-parameter prediction, which may give better results.

3/31/2017

3/30/2017

Changed the training function and learned how to set initial weights and biases

Today, I found out how to set the initial weights and biases. I also changed my training function from LM (Levenberg-Marquardt) to SCG (scaled conjugate gradient); SCG runs iterations faster than LM.

Summary:

Today, I found out how to set the initial weights and biases of the neural network model. I tried various initial values, but they all gave similar results.
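One common way to generate different starting weights is the classic Nguyen-Widrow rule. The sketch below is a standalone NumPy version for one hidden layer, seeded so that each run starts from a different but reproducible point. It is an illustration of the textbook rule, not the toolbox's own initialization code; `nguyen_widrow_init` is a hypothetical name, and the 16 inputs and 10 hidden neurons are example sizes.

```python
import numpy as np

def nguyen_widrow_init(n_inputs: int, n_hidden: int, seed: int):
    """Classic Nguyen-Widrow initialization for one hidden layer.

    Assumes the inputs are scaled to roughly [-1, 1].
    Returns (weights of shape (n_hidden, n_inputs), biases of shape (n_hidden,)).
    """
    rng = np.random.default_rng(seed)
    beta = 0.7 * n_hidden ** (1.0 / n_inputs)            # scale factor
    w = rng.uniform(-0.5, 0.5, size=(n_hidden, n_inputs))
    # Rescale each hidden neuron's weight vector to have norm beta.
    w = beta * w / np.linalg.norm(w, axis=1, keepdims=True)
    b = rng.uniform(-beta, beta, size=n_hidden)           # biases spread over [-beta, beta]
    return w, b

# Different seeds give different starting points for the same architecture.
for seed in (0, 1, 2):
    w, b = nguyen_widrow_init(n_inputs=16, n_hidden=10, seed=seed)
    print(seed, np.round(w[0, :3], 3))
```

If several seeds all end at similar errors, as observed here, that suggests the initialization is probably not the main limit on performance.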

I also switched to a new training function (SCG) so that the model iterates faster than before.

The following are the results I obtained today.

The first figure shows R for the training data (a random 90% of the samples) and the testing data (the remaining 10%).
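R in these regression figures is presumably the correlation coefficient between targets and network outputs. A minimal sketch computing it separately for a random 90%/10% split, assuming targets and predictions are 1-D NumPy arrays (the data below are synthetic placeholders and `regression_r` is a hypothetical helper):

```python
import numpy as np

def regression_r(targets: np.ndarray, outputs: np.ndarray) -> float:
    """Pearson correlation coefficient R between targets and model outputs."""
    return float(np.corrcoef(targets, outputs)[0, 1])

rng = np.random.default_rng(0)
y = rng.normal(size=100)                 # synthetic targets
y_hat = y + 0.5 * rng.normal(size=100)   # synthetic, imperfect predictions

idx = rng.permutation(100)
train, test = idx[:90], idx[90:]         # random 90% / 10% split
print("R train:", regression_r(y[train], y_hat[train]))
print("R test: ", regression_r(y[test], y_hat[test]))
```

R only measures linear association: a prediction that is consistently offset or scaled can still have R close to 1, so R and R2 should not be read interchangeably.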

The second and third figures show the prediction performance, which is not very good.

Tomorrow, I will try to find more ways to improve the model.

3/29/2017

Looking for the source code of the Neural Network Toolbox

Today, I looked for code that generates a neural network. This may help me learn the structure of the Neural Network Toolbox and how to modify it.

Summary:

On Tuesday, I met with Gowtham. However, he only used the most basic neural network model without modifying it, so he could not help me.

Today, I looked for source code online. I want to set different initial weights so that the model produces different results; by comparing them, I may be able to find the best one.

I found a function that generates a complete, standalone function for simulating a neural network, including all settings, weight and bias values, module functions, and calculations in one file. However, I have not yet found how to set the initial weights to get different modeling results.

Tomorrow, I will continue looking for the Neural Network Toolbox source code so that I can understand it better and learn how to improve it.

3/27/2017

Comparison of prediction without validation

Today, I modified my ANN model to remove the validation set, dividing all data into only training and testing sets, and obtained better results.

Summary:

I ran ANN models with different proportions of training and testing data and got similar results. Each model may need several runs to reach its best result, because the R2 of the testing data is sometimes very low.

Across the different training/testing splits, the best results all perform similarly and reasonably well.

The first figure is the best result with 80% training data and 20% testing data.

The second figure is the best result with 70% training data and 30% testing data.
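The two-way data division can be sketched as a random permutation of the depth indices, assuming one sample per depth (3042 depths, as in the 3/21 post). `train_test_split_idx` is a hypothetical helper, not the toolbox's own division function:

```python
import numpy as np

def train_test_split_idx(n_samples: int, train_frac: float, seed: int):
    """Randomly split sample indices into training and testing sets (no validation set)."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_samples)
    n_train = int(round(train_frac * n_samples))
    return idx[:n_train], idx[n_train:]

for frac in (0.8, 0.7):
    train_idx, test_idx = train_test_split_idx(3042, frac, seed=0)
    print(f"{frac:.0%} train: {len(train_idx)} samples, test: {len(test_idx)} samples")
```

Because the best of several runs is picked by its testing R2, that testing score will tend to be optimistic; keeping a separate subset untouched for the final comparison would give a fairer estimate.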

Although these results are better than last week's, they are still not good enough. I think there are some problems with the global optimization code I found online. Tomorrow I will discuss it with Gowtham to see if there are any solutions.

3/24/2017

Maybe it is because of local minima

Today, I prepared for the group meeting and discussed research with group members. In the evening, I thought about my own research; I think the problem may be a local minimum.

Summary:

During training, my model usually stops after only 10 or 20 iterations because of the validation checks. I think the performance function (MSE) may be converging to a local minimum, so the ANN model iterates only a few times.
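The validation-check stop works roughly like the sketch below: training halts once the validation error has not improved for a fixed number of consecutive epochs (in MATLAB this limit is the `max_fail` training parameter). Stopping after 10 or 20 iterations therefore means the validation error stopped improving early, which can reflect a local minimum but also overfitting or a validation split that differs from the training data. The function name and the patience of 6 are illustrative assumptions.

```python
def early_stop_epoch(val_errors, patience=6):
    """Return (stop_epoch, best_epoch) for validation-based early stopping.

    Training stops once the validation error has failed to reach a new
    minimum for `patience` consecutive epochs; the weights from `best_epoch`
    would be the ones kept.
    """
    best = float("inf")
    best_epoch = 0
    fails = 0
    for epoch, err in enumerate(val_errors):
        if err < best:
            best, best_epoch, fails = err, epoch, 0
        else:
            fails += 1
            if fails >= patience:
                return epoch, best_epoch
    return len(val_errors) - 1, best_epoch

# Validation error drops quickly, then stalls, so training stops early.
val = [1.0, 0.6, 0.45, 0.40, 0.41, 0.42, 0.40, 0.43, 0.44, 0.45, 0.46, 0.47]
print(early_stop_epoch(val))
```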

I searched online but found few ways to improve the ANN model by adding functions that achieve global optimization.

However, I did find a package called the Particle Swarm Optimization Toolbox, which comes with instructions for adding it to the Neural Network Toolbox.
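To illustrate what particle swarm optimization does, here is a minimal global-best PSO in NumPy. It is a generic sketch, not the toolbox mentioned above. To train an ANN with it, `f` would presumably be the network's MSE as a function of its flattened weight vector; that wiring is an assumption. The inertia and acceleration constants are commonly used default values.

```python
import numpy as np

def pso_minimize(f, dim, n_particles=30, n_iters=200, bounds=(-5.0, 5.0),
                 w=0.729, c1=1.49445, c2=1.49445, seed=0):
    """Minimal global-best particle swarm optimization of f: R^dim -> R."""
    rng = np.random.default_rng(seed)
    lo, hi = bounds
    x = rng.uniform(lo, hi, size=(n_particles, dim))   # particle positions
    v = np.zeros_like(x)                                # particle velocities
    pbest = x.copy()                                    # each particle's best position
    pbest_val = np.array([f(p) for p in x])
    g = pbest[np.argmin(pbest_val)].copy()              # swarm's best position
    for _ in range(n_iters):
        r1 = rng.random((n_particles, dim))
        r2 = rng.random((n_particles, dim))
        # Inertia + pull toward personal best + pull toward global best.
        v = w * v + c1 * r1 * (pbest - x) + c2 * r2 * (g - x)
        x = np.clip(x + v, lo, hi)
        vals = np.array([f(p) for p in x])
        improved = vals < pbest_val
        pbest[improved] = x[improved]
        pbest_val[improved] = vals[improved]
        g = pbest[np.argmin(pbest_val)].copy()
    return g, float(pbest_val.min())

# Rastrigin function: many local minima, global minimum 0 at the origin.
def rastrigin(p):
    return 10 * len(p) + np.sum(p**2 - 10 * np.cos(2 * np.pi * p))

best_x, best_val = pso_minimize(rastrigin, dim=2)
print(best_x, best_val)
```

Because the swarm samples many points at once and shares the best position found so far, it is less likely than a single gradient-based run to stay stuck in the first local minimum it meets, though it gives no guarantee of finding the global one.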

However, the instructions are complicated and I have run into some problems, so I have not finished it yet. I will try to figure it out over the weekend.

Next week, I will continue to look for ways to improve ANN model performance.

3/23/2017

getting the trend of the T2 distribution curve, but not accurately

Today, I tried the model with and without the QElan logging data, and the results were almost the same. The predictions capture the trend of the T2 distribution but are not very accurate. Also, the R2 distribution is not concentrated at high values.

Summary:

The following are the results I got today.

They show 32 random depths; the blue lines are the original curves and the red lines are the predicted curves. I have changed every parameter of the model, tried every training function and set of initial parameters, and even tried every model setting, but none of them improved the prediction.

Finally, I asked Hao to help me predict the 6 parameters in Python, and the results still did not improve. There seems to be nothing wrong with the setup, yet we cannot get good predictions.

Over the last month, I have tried every method I could think of to improve the model, but none gave good results. Honestly, I do not know what to do now.

Since I have a class tomorrow morning, could you please meet me after our team meeting tomorrow? Maybe we could discuss what to do next; I am a little lost now.

Tomorrow, I will review my work this semester and prepare for the presentation.

3/22/2017

select new logging data

Today, I reviewed IP and selected some logging data.

Summary:

These are lithology logging data from QElan. They cover almost the same depth interval as the NMR data, so they cannot be used to predict the T2 distribution outside that depth range. However, we can obtain more QElan data outside the NMR depth range, which would let us predict T2 at other depths.

In addition, we do not need to predict T2 from the top to the bottom of the well; we only need to predict it at reservoir depths.

1. The logging data I selected are as follows:

QElan:ILLITE_COMBINER

QElan:CHLORITE_COMBINER

QElan:BOUND_WATER_COMBINER

QElan:QUARTZ_COMBINER

QElan:KFELDSPAR_COMBINER

QElan:CALCITE_COMBINER

QElan:DOLOMITE_COMBINER

QElan:ANHYDRITE_COMBINER

QElan:UWATER_COMBINER

QElan:UOIL_COMBINER

2. I also picked two Wire logging curves:

Wire:SW_HILT

3. I removed five of the initial 16 logging curves because they are highly correlated with other curves:

SonicScanner:AT20
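Removing highly correlated logs can be sketched as a greedy filter on the correlation matrix. The 0.9 threshold, the rule that the earlier column wins when two logs are correlated, and the log names are all assumptions for illustration, not the criterion actually used:

```python
import numpy as np

def drop_correlated(X: np.ndarray, names, threshold: float = 0.9):
    """Greedily keep logs whose |correlation| with every already-kept log is <= threshold.

    X has shape (n_depths, n_logs). Returns (X_kept, kept_names, dropped_names).
    """
    corr = np.abs(np.corrcoef(X, rowvar=False))
    keep = []
    for j in range(X.shape[1]):
        if all(corr[j, k] <= threshold for k in keep):
            keep.append(j)
    dropped = [names[j] for j in range(X.shape[1]) if j not in keep]
    return X[:, keep], [names[j] for j in keep], dropped

rng = np.random.default_rng(0)
a = rng.normal(size=500)
b = rng.normal(size=500)
X = np.column_stack([a, b, 2 * a + 0.01 * rng.normal(size=500)])  # third log nearly duplicates the first
X_kept, kept, dropped = drop_correlated(X, ["LOG_A", "LOG_B", "LOG_C"])
print(kept, dropped)
```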

Tomorrow, I will finish depth-matching the logging data to the T2 distribution parameter data, and then predict T2 with the new data to see whether the predictions are more accurate.
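Depth matching can be sketched as interpolating each log curve onto the NMR depths and discarding depths outside the log's interval. Linear interpolation, the helper name, and the sample depths and values below are assumptions for illustration, not the actual data or the IP workflow:

```python
import numpy as np

def depth_match(log_depth, log_values, nmr_depth):
    """Interpolate one log curve onto the NMR depths, keeping only the overlapping interval.

    Both depth arrays must be increasing. Returns (matched_depths, matched_values).
    """
    log_depth = np.asarray(log_depth, dtype=float)
    nmr_depth = np.asarray(nmr_depth, dtype=float)
    inside = (nmr_depth >= log_depth[0]) & (nmr_depth <= log_depth[-1])
    d = nmr_depth[inside]
    # Linear interpolation; NMR depths outside the log's range were excluded above.
    return d, np.interp(d, log_depth, log_values)

log_depth = np.array([1000.0, 1000.5, 1001.0, 1001.5, 1002.0])
curve = np.array([80.0, 82.0, 90.0, 86.0, 84.0])      # example log values
nmr_depth = np.array([999.8, 1000.25, 1001.25, 1002.4])
d, matched = depth_match(log_depth, curve, nmr_depth)
print(d, matched)
```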

3/21/2017

Plot T2 distribution comparison and R2 distribution

Today, I plotted the T2 distribution comparison and the R2 distribution. As I said, R2 is low and the T2 distribution comparison results are not good enough.

Summary:

The following are 10 comparison plots, taken from the 100th, 200th, 300th, ..., 1000th of the 3042 depths.

The following is the R2 distribution figure. I only plotted R2 values between -1 and 1: about 1000 depths have R2 below -1, some as low as -1000, so they could not fit in one histogram. The distribution is very scattered, and few depths have R2 close to 1.
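If R2 is computed per depth in the usual way, R2 = 1 - SS_res/SS_tot, the very negative values have a simple explanation: SS_tot is the variation of the measured curve around its own mean, so a depth whose measured T2 curve is nearly flat has a tiny SS_tot, and even a moderate prediction error gives a large negative R2. A sketch, assuming measured and predicted curves are arrays with one row per depth (the numbers are made up):

```python
import numpy as np

def r2_per_depth(measured: np.ndarray, predicted: np.ndarray) -> np.ndarray:
    """Coefficient of determination for each depth (row) over its T2 bins (columns).

    R2 = 1 - SS_res / SS_tot, which is unbounded below. A depth with a perfectly
    flat measured curve (SS_tot = 0) would need special handling.
    """
    ss_res = np.sum((measured - predicted) ** 2, axis=1)
    ss_tot = np.sum((measured - measured.mean(axis=1, keepdims=True)) ** 2, axis=1)
    return 1.0 - ss_res / ss_tot

measured = np.array([[0.0, 1.0, 2.0, 1.0],
                     [0.0, 0.1, 0.2, 0.1]])
predicted = np.array([[0.0, 1.0, 2.0, 1.0],   # perfect fit
                      [1.0, 1.0, 1.0, 1.0]])  # poor fit on a nearly flat curve
r2 = r2_per_depth(measured, predicted)
print(r2)
in_range = r2[(r2 >= -1) & (r2 <= 1)]          # the subset shown in the histogram
```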

The following figures are for the biggest peak at every depth.

The first shows alpha and its R value.

The second shows miu and its R value.

The third shows sigma and its R value.

All three perform better than the two-peak prediction, but they are still not good enough.

Tomorrow, I will try to select new logging data to improve ANN model prediction performance.

3/20/2017

predicting only the higher peak gives better performance

Today, I selected the higher of the two peaks at every depth and assembled them into a new output data matrix. With this output, the ANN model performs better than before.
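Building the new output matrix amounts to picking, at each depth, the peak with the larger magnitude. A sketch assuming the six Gaussian parameters are stored per depth as [alpha1, miu1, sigma1, alpha2, miu2, sigma2] and that "higher" means larger alpha; the column layout, the function name, and the example values are assumptions:

```python
import numpy as np

def select_higher_peak(params: np.ndarray) -> np.ndarray:
    """Keep (alpha, miu, sigma) of the peak with the larger alpha at each depth.

    params: (n_depths, 6) ordered as [alpha1, miu1, sigma1, alpha2, miu2, sigma2].
    Returns: (n_depths, 3) output matrix [alpha, miu, sigma].
    """
    peaks = params.reshape(-1, 2, 3)             # (n_depths, peak, [alpha, miu, sigma])
    higher = np.argmax(peaks[:, :, 0], axis=1)   # index of the peak with larger alpha
    return peaks[np.arange(len(peaks)), higher]

params = np.array([[0.8, 1.5, 0.3, 0.2, 2.8, 0.4],
                   [0.1, 1.2, 0.2, 0.9, 3.0, 0.5]])
print(select_higher_peak(params))
```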

Summary:

The following compares predicting alpha, miu, and sigma separately with three different ANN models against predicting them together with one comprehensive ANN model.

1. alpha

The first parameter is alpha, the magnitude of the peak. The first modeling result comes from the comprehensive ANN model, while the second comes from a model built specifically for alpha. The second clearly gives better results, so I think we should predict the 3 parameters (and later the 6 parameters) one at a time with separate ANN models.

The third figure shows the R value for predicting alpha with one major peak. Compared with two peaks, R improves from about 0.5-0.6 to 0.7-0.8.

2. miu

The second parameter is miu, the mean location of the peak. The first modeling result comes from the comprehensive ANN model, while the second comes from a model built specifically for miu. The two give similar results.

The third figure shows the R value for predicting miu with one major peak. Compared with two peaks, R improves from about 0.5-0.6 to 0.6-0.7.

3. sigma

The third parameter is sigma, the deviation of the peak. The first modeling result comes from the comprehensive ANN model, while the second comes from a model built specifically for sigma. The two give similar results, although the second is somewhat better.

The third figure shows the R value for predicting sigma with one major peak; it improves only slightly compared with two peaks.
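To connect the three parameters back to a T2 curve, here is a sketch assuming each peak is a Gaussian with height alpha, centre miu, and width sigma on a log10(T2) axis. The axis, the peak-height reading of alpha, and the example values are assumptions. Comparing the two-peak curve with the higher-peak-only curve shows what is left out when only one peak is predicted:

```python
import numpy as np

def gaussian_t2(x: np.ndarray, peaks) -> np.ndarray:
    """Sum of Gaussian peaks: sum_k alpha_k * exp(-(x - miu_k)^2 / (2 * sigma_k^2)).

    x: bin positions (assumed here to be log10(T2)); peaks: iterable of (alpha, miu, sigma).
    """
    curve = np.zeros_like(x, dtype=float)
    for alpha, miu, sigma in peaks:
        curve += alpha * np.exp(-((x - miu) ** 2) / (2.0 * sigma ** 2))
    return curve

x = np.linspace(-1.0, 4.0, 64)                      # illustrative 64-bin log10(T2) axis
two_peak = gaussian_t2(x, [(0.8, 1.5, 0.3), (0.2, 2.8, 0.4)])
one_peak = gaussian_t2(x, [(0.8, 1.5, 0.3)])        # keeping only the higher peak
print(np.abs(two_peak - one_peak).max())
```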

In conclusion:

1. If we select the bigger of the two peaks and predict it at every depth, the predictions improve.

2. If we predict the 3 or 6 parameters one by one (one parameter at a time, building 3 or 6 ANN models in total), the prediction is more accurate than predicting all parameters at once with a single ANN model.

Tomorrow, I will try to further improve the prediction accuracy.

3/10/2017

reasons why the prediction is inaccurate

Today, I read the theory of NMR logging and the T2 distribution. Combining it with the parameters I obtained, I reached a conclusion that may help my further research.

Summary:

As the figure I plotted shows, different combinations of the three parameters correspond to different physical properties underground.

In most ANN models, however, each parameter usually represents one kind of information. In my view, that is why the ANN model does not predict these parameters well enough.

I also tried predicting the 6 parameters at once, but since only eight of their combinations have physical meaning, it is still very hard for the ANN model to identify them among all possible combinations.

What I should do next may be:

1. Find more logging data that are related to the T2 distribution and are common and cheap to acquire, although the current 16 may be enough if I can find a better approach.

2. Transform the parameters into other forms, such as multiplying miu and alpha; however, even if the predictions are good, separating them again will be another problem.

Next week, I will try these one by one.

3/09/2017

verify the problem of my model

Today, I read papers and watched online examples of ANN applications. I think the problem lies not in the model itself but in the data.

Summary:

First, I tried methods from papers and online sources to improve the ANN model, but its performance did not improve much.

Then I watched some examples online, reproduced them in MATLAB, and found that a similar ANN model performs well on them.

Afterwards, I applied the same MATLAB code to my data, but the results were not good. I adjusted every parameter of the model, but none of the changes worked well.

The best performance of the ANN model is shown below: the comparison plot of the first parameter, the first amplitude. The blue scatter points are the original data and the red lines are the predictions.

Now, I am pretty sure that the problem is the data.

1. I should find more predictors (logging data) that are related to the T2 distribution.

2. The 6 individual parameters I obtain from the Gaussian distributions fluctuate very randomly, so they may need to be transformed into other forms that fluctuate less.

Tomorrow, I will try both ways to improve my data. The model itself should be fine.

3/08/2017

find some papers and read one of them

Today, I found some papers and read one of them. It contains some ideas that could be useful for my research.

Summary:

The paper is called "methods to improve neural network performance in suspended sediment estimation".

Months ago, I searched several times for ANN applications in the petroleum industry, but those papers only introduce ANN superficially. So I decided to search for ANN papers in other areas: as long as they explain ANN in detail and introduce methods to improve prediction performance, they will be helpful for my research.

The paper compares several prediction models:

RDNN: range-dependent neural network

ANN: artificial neural network

LR: naïve linear regression

RDLR (RLR): range-dependent multi-linear regression

In conclusion, RDNN performs best. It divides the input and output data into three subsets and predicts each subset with a separate model, so each model covers a smaller range than the full dataset.
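A minimal sketch of the range-dependent idea, using the linear variant (RDLR) because it fits in a few lines: the samples are split into three ranges by terciles of one input, and a separate least-squares model is fitted to each range. Splitting on one input's terciles is an assumption; the paper's way of defining the ranges may differ. An RDNN would put a small network in place of each linear model.

```python
import numpy as np

def fit_range_dependent(X, y, split_col=0, n_ranges=3):
    """Range-dependent multi-linear regression: one least-squares model per range of X[:, split_col]."""
    col = X[:, split_col]
    edges = np.quantile(col, np.linspace(0, 1, n_ranges + 1))
    models = []
    for i in range(n_ranges):
        lo, hi = edges[i], edges[i + 1]
        upper = col <= hi if i == n_ranges - 1 else col < hi   # last range includes its top edge
        mask = (col >= lo) & upper
        A = np.column_stack([X[mask], np.ones(mask.sum())])    # add intercept column
        coef, *_ = np.linalg.lstsq(A, y[mask], rcond=None)
        models.append(coef)
    return edges, models

def predict_range_dependent(X, edges, models, split_col=0):
    # Assign each sample to a range using the inner edges, then apply that range's model.
    idx = np.searchsorted(edges[1:-1], X[:, split_col], side="right")
    idx = np.clip(idx, 0, len(models) - 1)
    A = np.column_stack([X, np.ones(len(X))])
    return np.array([A[i] @ models[k] for i, k in enumerate(idx)])

# Synthetic data with a different linear relation in each of three ranges.
rng = np.random.default_rng(0)
x = np.concatenate([rng.uniform(0, 1, 100), rng.uniform(2, 3, 100), rng.uniform(4, 5, 100)])
X = x[:, None]
y = np.where(x < 1.5, 2 * x, np.where(x < 3.5, 10 - x, 3 * x - 5))
edges, models = fit_range_dependent(X, y)
y_hat = predict_range_dependent(X, edges, models)
print(np.abs(y - y_hat).max())
```

A single linear model cannot follow all three relations at once, while each range model only has to fit its own smaller range, which is the intuition behind the paper's result.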

Second, the paper says we should choose the best number of neurons and hidden layers, but it does not say how.

Tomorrow, I will continue to try the two methods for my research. If they do not work, I will read more papers.

3/07/2017

several algorithms to predict 6 parameters

Today, I learned several algorithms to predict 6 parameters.

Summary:

Training functions:

Levenberg-Marquardt: a combination of gradient descent and Gauss-Newton. When mu is small, it is closer to Gauss-Newton; when mu is large, it is closer to gradient descent. It is sensitive to local minima.

Bayesian Regularization: addresses overfitting.

Quasi-Newton: generally better than gradient descent.

Backpropagation: a supervised learning method.

Conjugate Gradient: suited to large numbers of predictors and observations, for both linear and non-linear problems.

Gradient Descent: sensitive to local minima.

Gauss-Newton: good convergence toward the minimum.

The following are their results.

LM:

Bayesian Regularization:

Quasi-Newton:

Backpropagation:

Conjugate Gradient:

Gradient Descent:

Of the 6 algorithms above, Backpropagation performs best, but it is still not good enough. Since the results are poor, I did not plot the 6 subplots (500 observations individually).

Tomorrow, I will try to find methods to improve them.
